ESM3 and the Future of Protein Language Models

Pure sequence learning is out, multiscale data is in

EvolutionaryScale, a team spun out of Meta’s AI research department, today released ESM3, the sequel to the hugely popular ESM2 protein language model that was released in 2022. On the heels of the release, EvolutionaryScale also announced that they had raised a staggering $142 million in their seed round. But what makes ESM3 so different from previous protein language models? Does it warrant this level of hype?

To understand ESM3 and the surrounding hype, we’ll start with an overview of how protein language modeling has traditionally been done and use that as a springboard to see why ESM3 may represent a large step forward.

Note: If you’d like to learn more about protein language models before diving in here, check out my “Understanding Protein Language Models” series!

Protein Language Modeling Overview

The goal of protein language modeling is to understand the space of all possible proteins through training on sequence data. To that end, protein language models leverage the underlying architecture that powers all of the advances in natural language text — that is, the transformer.

Tokenization

Just as with text language models, protein language models operate on “tokens”, the discrete chunks that input sequences are divided into. The space of all possible tokens is referred to as the vocabulary of the model. In natural language text, choosing a tokenization scheme is difficult because different schemes can profoundly affect performance. If we chunk text at the level of letters, our vocabulary is very small, each token carries little meaning on its own, and the model will need much more data to learn. It will also be limited in the length of text it can read, since each character uses up one token of its context window (which can be thought of as the model’s working memory). But if we chunk by word, our vocabulary grows to tens of thousands of entries or more, and the model will again struggle to learn since it may only see rare words once or twice.

For protein language models, on the other hand, we have a natural tokenization point at the level of amino acids! Amino acids are the organic compounds that act as the building blocks of proteins (in fact, DNA’s primary role is to provide instructions to each organism’s cells about protein production: what proteins should be produced, how many, and at what time). There are 22 distinct amino acids known to be genetically encoded somewhere in life (though the standard genetic code specifies only 20), and these form the basis for any protein language model’s vocabulary.
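To make the amino-acid tokenization above concrete, here is a minimal sketch in Python. The special tokens and the integer ids are assumptions chosen for illustration; they are not ESM2's actual vocabulary, and the sketch only covers the 20 standard amino acids.

```python
# Hypothetical vocabulary: a few special tokens plus the 20 standard amino acids.
# Token names and ids are illustrative only, not the vocabulary of any real model.
SPECIAL_TOKENS = ["<pad>", "<cls>", "<eos>", "<unk>", "<mask>"]
AMINO_ACIDS = list("ACDEFGHIKLMNPQRSTVWY")
VOCAB = {tok: i for i, tok in enumerate(SPECIAL_TOKENS + AMINO_ACIDS)}


def tokenize(sequence):
    """Map a protein sequence to token ids, one token per residue."""
    ids = [VOCAB["<cls>"]]
    for residue in sequence.upper():
        # Anything outside the 20 standard letters falls back to <unk>.
        ids.append(VOCAB.get(residue, VOCAB["<unk>"]))
    ids.append(VOCAB["<eos>"])
    return ids


example = "MKTAYIAK"
token_ids = tokenize(example)
print(len(VOCAB), token_ids)
```

Note how small the vocabulary is compared to a text model: a couple dozen entries rather than tens of thousands, with every entry seen many times during training.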

Training Setup & Loss Function

Once we have a tokenization scheme, we need to train the model to perform our desired task. While text language models have focused squarely on generation, protein language models have instead focused on producing useful encodings of the target protein. To that end, they have typically used a bidirectional transformer with masked language modeling (MLM) loss rather than a unidirectional transformer with autoregressive loss. I’ll define these terms below:

Bidirectional - the transformer model can read a sequence both forwards and backwards. That is, each token can be influenced by the tokens before it and the tokens after it

Masked language modeling loss - we pick random tokens in the input sequence and hide them (or mask them) from the model. We then score the transformer model based on whether or not it can guess this hidden token given the rest of the input sequence (see the image above)

Unidirectional - the transformer can only read a sequence forwards. That is, future tokens cannot influence past tokens in an input sequence

Autoregressive loss - we hide the last token in a sequence and score the model based on its ability to predict that token using all of the tokens that precede it
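The two training objectives defined above can be sketched as follows. This is a simplified illustration, not the ESM2 training code: the function names, the 15% default masking rate, and the `<mask>` token are assumptions, and real MLM recipes often add extra tricks (such as occasionally swapping in a random token) that are left out here. In practice the autoregressive objective is usually applied at every position, so each prefix of the sequence yields one training example.

```python
import random

MASK = "<mask>"  # hypothetical mask token


def mlm_example(tokens, mask_prob=0.15, seed=0):
    """Hide random tokens; the model is scored only on the hidden positions."""
    rng = random.Random(seed)
    inputs, targets = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            inputs.append(MASK)   # the model sees the mask
            targets.append(tok)   # and must guess the original token
        else:
            inputs.append(tok)
            targets.append(None)  # unmasked positions are not scored
    return inputs, targets


def autoregressive_examples(tokens):
    """Each prefix of the sequence is paired with the next token to predict."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


protein = list("MKTAYIAK")
print(mlm_example(protein, mask_prob=0.5, seed=1))
print(autoregressive_examples(protein)[:2])
```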

Protein language models, by using the bidirectional model with MLM loss setup, are able to reference both previous and future amino acids when generating a representation for a given amino acid in a sequence. This allows the model to learn with less training data since it is an easier task than the unidirectional autoregressive case. In addition, it allows the model to attend to amino acids that may be far apart in the amino acid sequence but actually very close together in the resulting 3D protein structure.
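One way to picture the difference between the two setups is through the attention mask, which records which positions each token is allowed to look at. The sketch below is a plain-Python illustration under that assumption; `attention_mask` is a hypothetical helper, not part of any library.

```python
def attention_mask(length, causal):
    """mask[i][j] is True when position i may attend to position j."""
    return [
        [(j <= i) if causal else True for j in range(length)]
        for i in range(length)
    ]


bidirectional = attention_mask(5, causal=False)
unidirectional = attention_mask(5, causal=True)

# Residue 1 and residue 4 may be far apart in sequence but close in 3D space.
# Only the bidirectional mask lets residue 1 use information from residue 4.
print(bidirectional[1][4], unidirectional[1][4])
```

In the bidirectional case every row is fully visible, which is what lets the representation of an early residue depend on residues that come much later in the chain.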

ESM2 Results

Given the above tokenization scheme and training setup, ESM2 (and other protein language models like it) was able to produce some pretty impressive results. The base model, which was only trained to identify missing amino acids in a protein sequence, could be finetuned to perform tasks like protein function prediction, protein-protein interaction prediction, or protein structure prediction (as shown in the image above). It was able to make these predictions very quickly compared to contemporary competitors such as AlphaFold2 due to the efficiency of the language modeling approach. However, by the same token, its accuracy in structure prediction was generally worse than AlphaFold2’s.

Limitations of Pure Sequence Data

While ESM2 and other previous protein language models showed impressive results across several tasks, they have been fundamentally limited by their reliance on pure sequence data. This approach, while valuable, fails to capture the full complexity of biological systems and the hierarchical nature of protein interactions.

The key issue lies in the nature of DNA and protein data compared to natural language text. In language models trained on text, we observe a natural multiscale learning process. Text data contains paired instruction and answer data at multiple levels of abstraction, allowing models to learn tasks ranging from simple summarization to complex synthesis of multiple texts. For example, there may be a task-answer pair that says "Please summarize this passage of text", and then another that says "Please synthesize these summaries of different pieces of text into a single narrative". We move from one task (compressing text on a single topic into a summary) into a higher-level version of that same text (compressing multiple texts on multiple topics into a single summary). In other words, we're ascending a ladder of complexity in the types of instructions the LLM is learning to perform. This all happens naturally as part of the LLM training process because text is both the instruction and the answer. Or, to put it in the parlance of von Neumann computing, text is the program and it is the data (just as bits are both the program and the data in the von Neumann computer architecture).

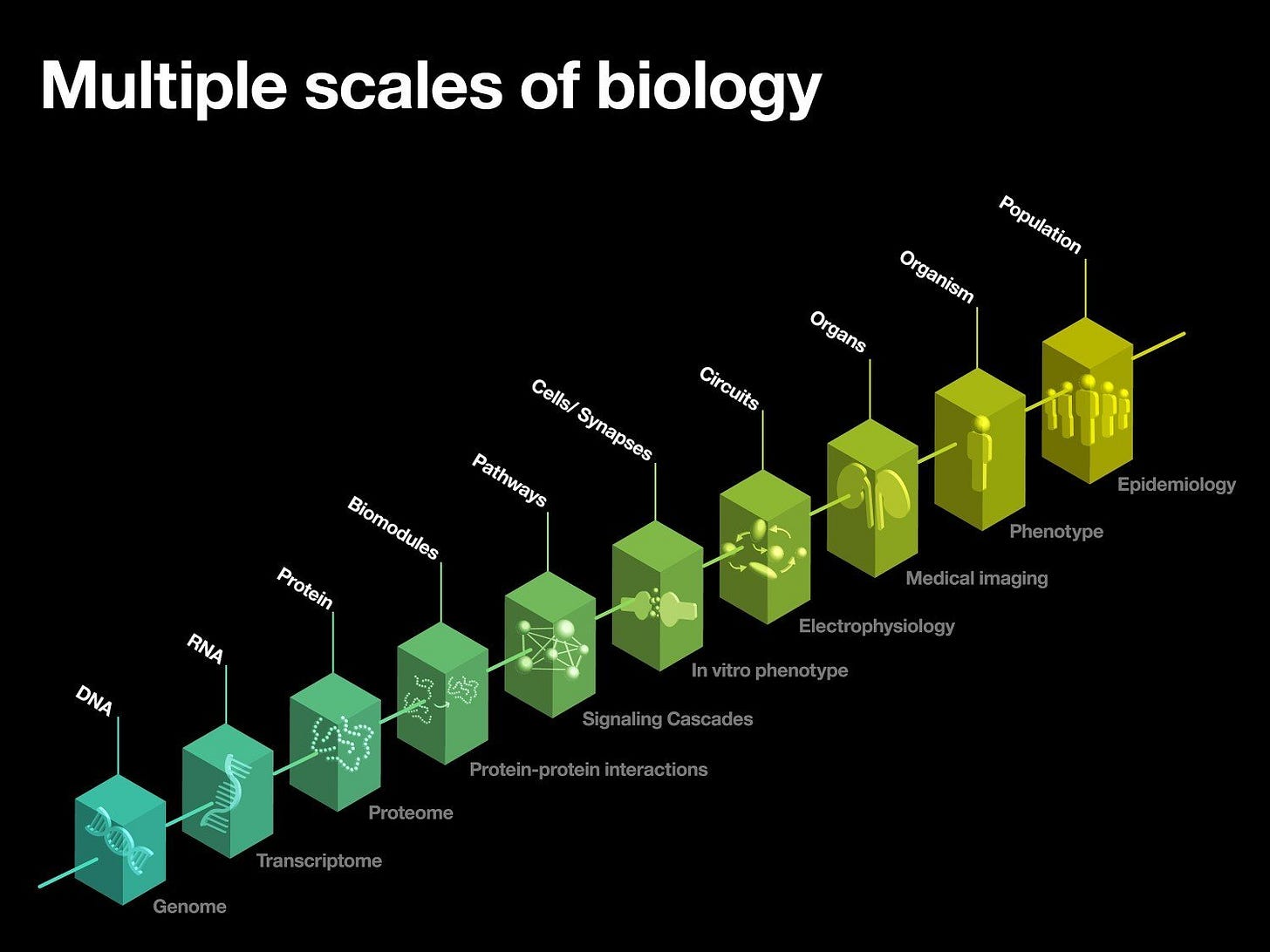

Now let us consider a DNA language model trained on DNA sequences. We can think of DNA sequences as instructions that detail how to produce a protein (just as we looked at text instructions above). The protein can then itself be viewed as a higher-level instruction (namely, how this molecule should interact with other molecules in the body). These interactions can be seen as yet higher-level instructions for how to compose reaction pathways in the body. And so on up the chain.

The core idea here is that if we train on only DNA sequences, we see the instructions at an early stage without ever viewing the solution (the protein sequence & structure). Moreover, once we "complete" this instruction (DNA -> protein), to do anything useful we need to continue climbing the hierarchy (i.e. now our protein shape becomes the instruction and we use it to identify complexes & interactions). But our DNA language model never sees how to do this from its DNA sequence data and thus can never learn how to ascend the hierarchy. We don't get this natural multiscale learning the way we do in text because DNA is not universal. The modality changes as we move up the ladder of complexity, whereas text is always text, regardless of the complexity or level of granularity of the instruction-answer pair.

Hence, to make biological foundation models that replicate the success of LLMs in text, we need a way to encode all of the various modalities and learn them jointly in a single model. We also need to accelerate data collection efforts across these modalities rather than focusing purely on the lowest levels (i.e. DNA & protein sequencing).

ESM3 & Multiscale Data

ESM3 addresses this limitation by incorporating a form of multiscale data into its training process. Instead of focusing solely on amino acid sequence data as ESM2 did, it integrates:

Atomic coordinates: Providing information about protein structure

Sequence data: Offering the fundamental building blocks of proteins

Function data: Giving context to the protein's role in biological systems

All three of these modalities are tokenized and learned jointly by the model (see image above). That is, unlike ESM2, which only made predictions for a protein’s amino acid sequence, ESM3 learns to simultaneously make predictions about a given protein’s amino acid sequence, 3D structure, and high-level functional details. This multiscale approach allows ESM3 to jointly learn about proteins at multiple levels of abstraction:

Low-level: Understanding what sequence codes for a particular protein

Mid-level: Comprehending the protein's shape after folding

High-level: Grasping the protein's function(s) in nature
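To give a feel for what jointly tokenized tracks might look like, here is a toy sketch. It is not the ESM3 API or its real data format: the `MultiTrackProtein` class, the structure token label `s17`, and the function label `fluorescent` are all hypothetical, and the sketch assumes one token per residue in every track for simplicity. Masked positions in any track are the ones the model would be asked to fill in.

```python
from dataclasses import dataclass

MASK = "<mask>"  # hypothetical mask token shared by all tracks


@dataclass
class MultiTrackProtein:
    sequence: list   # amino acid tokens
    structure: list  # discrete structure tokens (made-up labels)
    function: list   # function annotation tokens (made-up labels)

    def __post_init__(self):
        # Assumption for this sketch: tracks are aligned residue by residue.
        if not (len(self.sequence) == len(self.structure) == len(self.function)):
            raise ValueError("all tracks must have one token per residue")

    def to_predict(self):
        """Positions the model would need to fill in, listed per track."""
        tracks = {
            "sequence": self.sequence,
            "structure": self.structure,
            "function": self.function,
        }
        return {
            name: [i for i, tok in enumerate(track) if tok == MASK]
            for name, track in tracks.items()
        }


# A partially specified protein: some residues, some structure, full function.
prompt = MultiTrackProtein(
    sequence=["M", MASK, MASK, "G", MASK],
    structure=[MASK, "s17", "s17", MASK, MASK],
    function=["fluorescent"] * 5,
)
targets = prompt.to_predict()
print(targets)
```

The point of the layout is that the same masking idea used for sequence-only models carries over to every track, so a prompt can pin down whichever levels are known and leave the rest for the model to generate.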

By learning to reason about proteins across these multiple scales, ESM3 will likely achieve significant performance improvements, particularly in generative tasks that require integration of knowledge from all three scales. The EvolutionaryScale team validated this with a case study in which they designed a new fluorescent protein that had never been seen before in nature (image below).

They did this by specifying high-level functional details, important protein structure requirements for fluorescence, and known amino acid sequences that code for those structural snippets. Given this conditioning data, the model was able to generate the remainder of the protein through reasoning about the constraints across all three scales of complexity: sequence, structure, and function.

Conclusion

Overall, ESM3 warrants the hype and signals a potential paradigm shift in the field of protein language modeling. It represents a first step in moving from an era focused on scaling up amino acid sequence data alone towards a focus more on the integration of diverse, multiscale data sources. This approach aligns more closely with the success of large language models in natural language processing, where the universality of text allows for seamless learning across various levels of complexity. By incorporating multiple modalities of biological data, ESM3 and its successors will be much better positioned to replicate this success in the biological domain.

Moving forward, this shift implies a need for accelerated data collection efforts across various biological modalities, rather than focusing solely on protein sequencing and structure determination. For ESM4 to truly serve as a foundation model for biology the way GPT-4 has served as a foundation model for text, we will need to go beyond sequence, structure, and function to include reaction pathways, cellular expression levels, and more.

ESM3 is great! Before ESM3, SaProt proposed combining amino acid (AA) tokens with discrete structural tokens for pre-training and showed that this helps scale to larger datasets.

SaProt: Protein Language Modeling with Structure-aware Vocabulary

Do you have any hypothesis on why a simple BERT-like structure for ESM performs way better than others? It sounds too simple almost