The race towards Artificial General Intelligence (AGI) and state-of-the-art AI models is often framed around breakthrough algorithms and novel architectures. However, a deeper analysis reveals that the true drivers of durable leadership lie elsewhere. While algorithmic innovation is crucial, the path to AI supremacy is increasingly paved with massive datasets and unparalleled computational power. When viewed through this lens, Google DeepMind emerges not just as a competitor, but as the likely frontrunner.

The Trifecta of AI Progress: Algorithms, Compute, and Data

Training large-scale AI models hinges on three interdependent pillars:

Algorithms: These are the recipes, the architectures (like Transformers, Mixture-of-Experts), and the training methodologies (loss functions, optimization techniques) that dictate how effectively models learn patterns and relationships from data. Efficient algorithms extract more "knowledge" per unit of data and compute.

Compute: This represents the raw processing required to execute the vast number of calculations involved in training deep neural networks. Total training compute is typically measured in FLOPs (floating-point operations), while hardware throughput is measured in FLOP/s (floating-point operations per second). It's the energy input transforming potential into a trained artifact.

Data: This is the raw material – the text, images, code, audio, video, and other modalities – from which the model learns the structure of the world, language, and reasoning. The quality, quantity, and diversity of data fundamentally shape the model's capabilities.
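To make the compute pillar concrete, here is a back-of-the-envelope sketch. It assumes the widely used rule of thumb that training a dense Transformer costs roughly 6 FLOPs per parameter per training token. The model size, token count, chip count, peak throughput, and utilization below are all hypothetical values chosen for illustration, not figures for any real model or cluster.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate total training compute for a dense Transformer: C ~ 6 * N * D."""
    return 6.0 * n_params * n_tokens


def training_days(total_flops: float, n_chips: int,
                  peak_flops_per_sec: float, utilization: float) -> float:
    """Wall-clock days needed at a cluster's sustained throughput."""
    # Sustained FLOP/s is the peak rate scaled by how much of it is actually achieved.
    sustained = n_chips * peak_flops_per_sec * utilization
    return total_flops / sustained / 86_400  # 86,400 seconds per day


# Hypothetical run: 70e9 parameters trained on 1.4e12 tokens.
flops = training_flops(70e9, 1.4e12)
# Hypothetical cluster: 4,096 chips at 1e15 FLOP/s peak, 40% utilization.
days = training_days(flops, 4096, 1e15, 0.40)
print(f"{flops:.2e} FLOPs, about {days:.1f} days")
# prints "5.88e+23 FLOPs, about 4.2 days"
```

The estimate keeps the two units apart: FLOPs describe the size of the job, FLOP/s describe the cluster, and dividing one by the other gives time. Doubling either the parameter count or the token count doubles the job, which is why access to more accelerators translates so directly into the ability to train larger models.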

These factors exhibit strong interplay. An algorithmic leap, like the transition from RNNs/LSTMs to Transformers for sequence modeling, unlocked the potential to effectively utilize vastly larger datasets and compute budgets. Before Transformers, training on web-scale text data with massive parameter counts often hit diminishing returns due to limitations in handling long-range dependencies and parallelization. The Transformer architecture, with its self-attention mechanism, was significantly more scalable, allowing marginal increases in data and compute to translate into tangible performance gains once more. The performance wasn't just better; the scaling properties improved.

The Illusion of Algorithmic Moats

Recent history is replete with examples emphasizing algorithmic prowess. The excitement around models like DeepSeek-R1, which achieved remarkable performance with comparatively modest training resources, underscores the power of efficient architectures (like Mixture-of-Experts) and optimized training strategies. It shows that clever algorithms can significantly improve the ratio of performance to compute and data.

However, as I argued previously in On Algorithmic Moats and the Path to AGI, algorithms alone do not constitute a sustainable competitive advantage in the current AI landscape. Why?

Talent Mobility: The AI research community is fluid. Top researchers frequently move between major labs like Google DeepMind, OpenAI, Anthropic, and Meta, carrying conceptual knowledge and insights about successful (and unsuccessful) architectural experiments and training techniques. While NDAs exist, the fundamental ideas diffuse rapidly.

Open Source and Publication: Key players like Meta (LLaMA series) and innovative teams like DeepSeek often open-source their models and research. Academic institutions and arXiv ensure rapid dissemination of novel techniques. This accelerates the entire field but levels the playing field algorithmically. A breakthrough published today can be replicated and built upon by competitors within months, if not weeks.

Therefore, relying solely on being the first to discover the next architectural tweak is a fragile strategy. Being a fast-follower, capable of rapidly implementing and scaling proven algorithmic advances discovered elsewhere, might be just as effective, provided you possess advantages in the other two factors.

The Real Moats: Data and Compute Scale

If algorithms are becoming increasingly commoditized, what provides a durable edge? The answer lies in the factors that are far harder to replicate: data and compute.

Why Scale Matters: Empirical scaling laws in deep learning show that model performance often improves predictably, following a power law, as model size, dataset size, and training compute increase. While we've seen impressive results from smaller, efficient models, we are likely still far from the point of diminishing returns for many complex reasoning and multimodal tasks. Reaching the next plateau of AI capability will almost certainly require scaling data and compute far beyond current levels.
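A minimal sketch of what such a power law looks like, assuming one commonly used functional form in which predicted loss is an irreducible term plus one term that shrinks with parameter count and one that shrinks with training tokens. The function name and every constant below are placeholders chosen for illustration, not fitted values from any published study.

```python
def predicted_loss(n_params: float, n_tokens: float,
                   e: float = 1.7, a: float = 400.0, b: float = 400.0,
                   alpha: float = 0.34, beta: float = 0.28) -> float:
    """L(N, D) = E + A / N**alpha + B / D**beta, with placeholder constants."""
    return e + a / n_params ** alpha + b / n_tokens ** beta


# Scale parameters and tokens together by 10x per step (hypothetical budgets).
budgets = [(1e9 * 10 ** k, 2e10 * 10 ** k) for k in range(4)]
losses = [predicted_loss(n, d) for n, d in budgets]

for (n, d), value in zip(budgets, losses):
    print(f"N={n:.0e}  D={d:.0e}  predicted loss={value:.3f}")
```

Under this form, each 10x step in scale lowers the predicted loss, but by a smaller absolute amount than the step before, and the loss never falls below the irreducible term `e`. That is the sense in which improvement is predictable yet increasingly expensive: every further gain requires a multiplicative increase in data and compute.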

Why They Are Moats:

Non-Portability: Unlike algorithmic knowledge, engineers cannot easily take petabytes of proprietary, curated internal data or access to tens of thousands of specialized accelerators (like TPUs or GPUs) with them when they change jobs.

High Barrier to Entry: Building world-class compute infrastructure (data centers, custom silicon, high-speed interconnects) and accumulating diverse, high-quality datasets at the scale required represents billions of dollars in capital expenditure and years, often decades, of cumulative effort and investment. This is not something startups or even well-funded competitors can easily replicate overnight.

Synergistic Flywheels: Access to vast compute allows for more ambitious experiments and training larger models. These improved models, when deployed, can generate new, valuable interaction data, which feeds back into further model improvements, creating a virtuous cycle that is difficult for competitors with lesser resources to match.

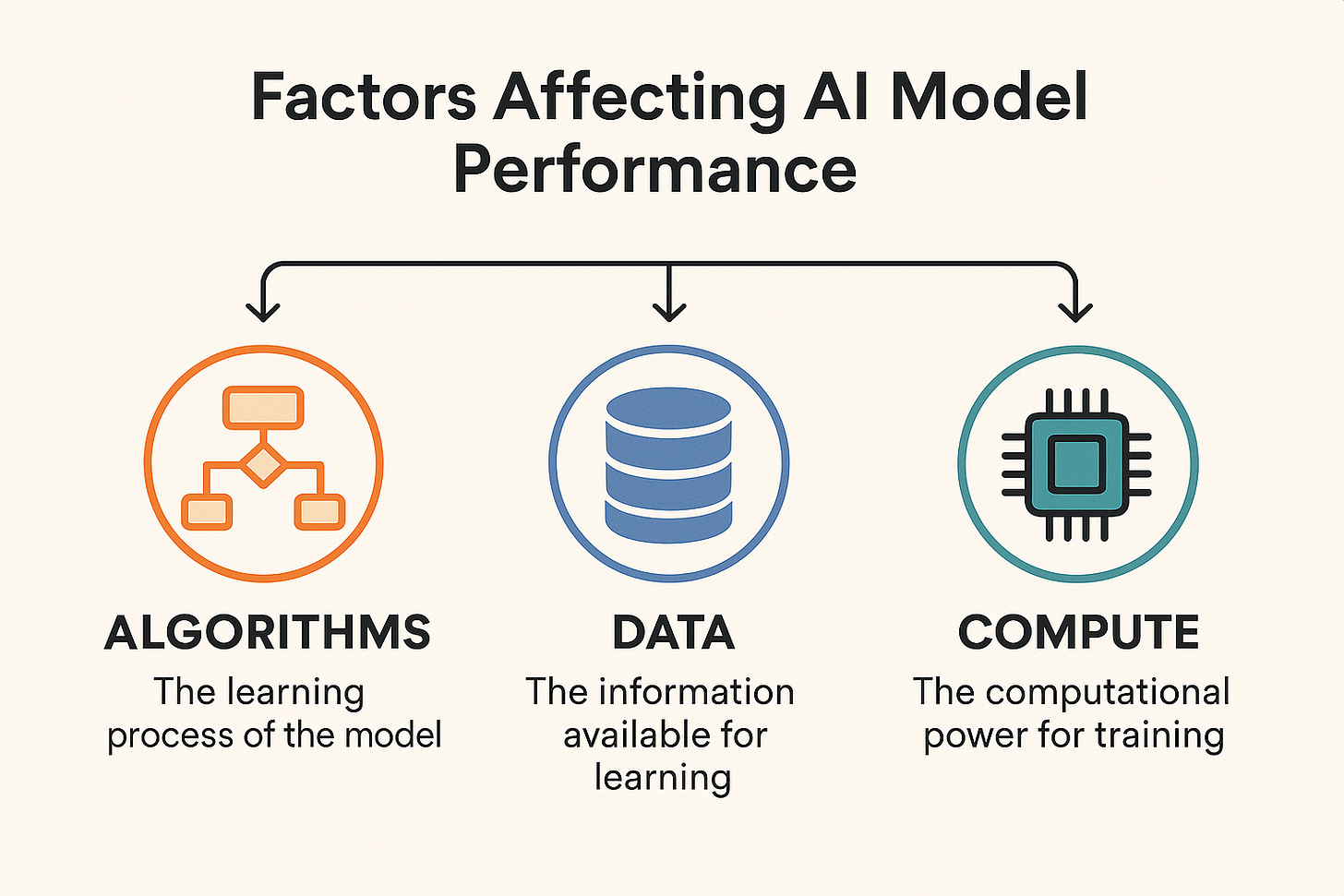

Gemini 2.5 Pro: A Glimpse of the Advantage

Gemini 2.5 Pro Experimental, recently released by Google, offers a glimpse into how these interacting factors of data and compute can lead to a durable advantage in Google's AI model performance. Although OpenAI and DeepSeek released highly performant thinking models months ahead of Google (representing a large lead in algorithmic innovation), Gemini 2.5 Pro has managed to rank #1 across the board in Chatbot Arena and to lead on a wide range of benchmarks.

While Google describes Gemini 2.5 Pro partly through algorithmic concepts like "thinking models," the sheer breadth and depth of its capabilities, validated by both benchmarks and human preference, strongly suggest that these algorithms are being scaled and refined using computational resources and data diversity that few, if any, competitors can match. The "significantly enhanced base model" (as described by Google) is almost certainly a product of larger parameter counts trained for longer durations on more diverse data, enabled by Google's vertical integration of hardware (TPUs) and software within their hyper-scale data centers.

Google's Unassailable Advantage

This brings us to Google. When assessing data and compute advantages, Google stands in a league of its own.

1. Data Dominance:

Breadth and Modality: Google possesses arguably the most diverse and extensive collection of multimodal data on the planet. Consider the sources:

Google Search: Billions of daily queries provide unparalleled insight into human intent, language variation, and real-time information needs (text, images, implicit semantics).

YouTube: The world's largest video platform offers vast amounts of video, audio, transcripts, comments, and multilingual content – crucial for multimodal understanding.

Android: Interaction data from billions of devices provides insights into user behavior, application usage, and sensor inputs (potentially anonymized and aggregated).

Google Maps: Geospatial data, satellite imagery, Street View imagery, reviews, and real-time traffic information.

Gmail, Docs, Workspace: While respecting user privacy is paramount, Google potentially has access (for internal R&D, aggregated/anonymized analysis, or opt-in features) to colossal amounts of text, code, and collaborative data reflecting professional and personal communication patterns.

Google Books: A massive corpus of digitized text spanning centuries.

Chrome: Web interaction data (aggregated and anonymized) reflecting how users navigate and consume information online.

Scale and Freshness: The sheer volume is staggering, but equally important is the constant influx of new data, keeping datasets fresh and reflecting current events, language evolution, and emerging trends. This continuous stream is vital for maintaining model relevance and accuracy.

2. Compute Superiority:

Custom Silicon (TPUs): Google made a strategic bet on custom AI accelerators years ago with its Tensor Processing Units (TPUs). Now in their 7th generation, TPUs are designed specifically for large-scale ML training and inference, offering potentially significant advantages in performance-per-watt and performance-per-dollar for Google's specific workloads and scale compared to general-purpose GPUs. This vertical integration allows hardware and software co-design for optimal efficiency.

Infrastructure Mastery: Google operates some of the world's most sophisticated and efficient data centers. Decades of experience in distributed systems (MapReduce, Borg/Kubernetes, Spanner) translate into an unparalleled ability to orchestrate and execute massively parallel training jobs reliably and efficiently across thousands of accelerators. This isn't just about owning chips; it's about the networking fabric, power delivery, cooling, and system software that make large-scale training feasible.

Capital Investment: Google has the financial resources to sustain and expand this infrastructure lead, continuously investing billions in data centers and next-generation TPUs.

Conclusion: The Inevitable Frontrunner?

While the AI race is far from over, and competitors like OpenAI and Anthropic continue to innovate, the fundamental dynamics favor players with entrenched advantages in data and compute. Algorithmic breakthroughs will continue to happen across the ecosystem, but they diffuse quickly. The ability to scale these algorithms using proprietary data and custom-built, hyper-scale infrastructure is the real differentiator.

Google's unparalleled data ecosystem, harvested across its diverse product portfolio, combined with its long-term investment in custom TPUs and mastery of planetary-scale computing, creates a formidable moat. Gemini 2.5 Pro is likely just an early indicator of what this integrated advantage can produce. As the demands for data and compute continue to escalate on the path to more capable AI, Google's lead in these foundational resources positions it strongly to outpace the competition and ultimately define the next era of artificial intelligence.